Physics- and statistics-based earthquake forecasting

Current state-of-the-art

In most regions and countries worldwide, seismic hazard assessment is based on time-independent hazard models. This means that the probability of an earthquake occurring is assumed to be the same today, tomorrow, or a year from now, irrespective of temporal fluctuations in seismic activity. There is, however, a clear trend towards the use of time-dependent models for Operational Earthquake Forecasting (OEF) systems, as in the United States [1], Italy [2], and New Zealand [3]. All three of these systems are based, in one way or another, on the Epidemic-Type Aftershock Sequence (ETAS) model [4].
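As a rough illustration of what time-independence implies, the sketch below assumes a stationary Poisson process, which is a common but not universal choice for time-independent hazard models. The annual rate is a hypothetical value chosen for illustration and is not taken from any of the cited models.

```python
import math


def probability_of_at_least_one_event(annual_rate: float, window_years: float) -> float:
    """Probability of one or more events in a time window, assuming a
    stationary Poisson process with a constant annual rate."""
    return 1.0 - math.exp(-annual_rate * window_years)


# Hypothetical rate: on average one target earthquake per 100 years.
ANNUAL_RATE = 0.01

p_one_day = probability_of_at_least_one_event(ANNUAL_RATE, 1.0 / 365.25)
p_one_year = probability_of_at_least_one_event(ANNUAL_RATE, 1.0)
p_fifty_years = probability_of_at_least_one_event(ANNUAL_RATE, 50.0)

print(f"1 day:    {p_one_day:.6f}")
print(f"1 year:   {p_one_year:.6f}")
print(f"50 years: {p_fifty_years:.6f}")
```

Under this assumption the result depends only on the length of the window, not on when the window starts or on what has happened recently. Time-dependent models relax exactly this restriction.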

ETAS is currently the most widely accepted state-of-the-art model for time-dependent earthquake forecasting. It is based on a few simple assumptions and well-established empirical principles about earthquakes’ triggering behaviour. In ETAS, earthquakes can be either “background” events or “triggered” events, and all of them can trigger cascades of aftershocks. These cascades follow empirical laws such as the Omori-Utsu law for the temporal distribution of aftershocks and the Gutenberg-Richter law for the frequency distribution of earthquake magnitudes. Although it has been around for many years, ETAS hits a sweet spot between simplicity and accuracy, which makes it difficult for newer models to establish themselves as the new state-of-the-art.
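The ingredients named above can be sketched in a few lines. The following is a minimal, purely temporal illustration, assuming one common parameterisation: a constant background rate plus, for each past event, an exponential productivity term multiplied by an Omori-Utsu decay. The function names and all parameter values are hypothetical and are not taken from [4] or from any operational system; spatial kernels and parameter estimation are omitted.

```python
import math
import random


def etas_intensity(t, history, mu, k, alpha, c, p, m_c):
    """Event rate at time t (in days) given past events.

    history: list of (time, magnitude) tuples.
    mu:      background rate (events per day).
    k, alpha: productivity parameters; larger events trigger more aftershocks.
    c, p:    Omori-Utsu parameters controlling the temporal decay.
    m_c:     reference (completeness) magnitude.
    """
    rate = mu
    for t_i, m_i in history:
        if t_i < t:
            productivity = k * math.exp(alpha * (m_i - m_c))
            rate += productivity / (t - t_i + c) ** p  # Omori-Utsu decay
    return rate


def sample_gutenberg_richter_magnitude(rng, b, m_c):
    """Draw a magnitude >= m_c from the Gutenberg-Richter distribution
    by inverting its cumulative distribution function."""
    u = rng.random()  # uniform in [0, 1)
    return m_c - math.log10(1.0 - u) / b


# Hypothetical parameter values, for illustration only.
PARAMS = dict(mu=0.1, k=0.05, alpha=1.0, c=0.01, p=1.1, m_c=3.0)
catalogue = [(0.0, 6.0)]  # a single magnitude 6 event at t = 0

rate_quiet = etas_intensity(1.0, [], **PARAMS)
rate_day_1 = etas_intensity(1.0, catalogue, **PARAMS)
rate_day_10 = etas_intensity(10.0, catalogue, **PARAMS)

rng = random.Random(42)
magnitudes = [sample_gutenberg_richter_magnitude(rng, b=1.0, m_c=3.0) for _ in range(1000)]
```

In this sketch the rate one day after the magnitude 6 event is well above the background rate and has decayed noticeably by day ten, which is the qualitative behaviour the Omori-Utsu law describes.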

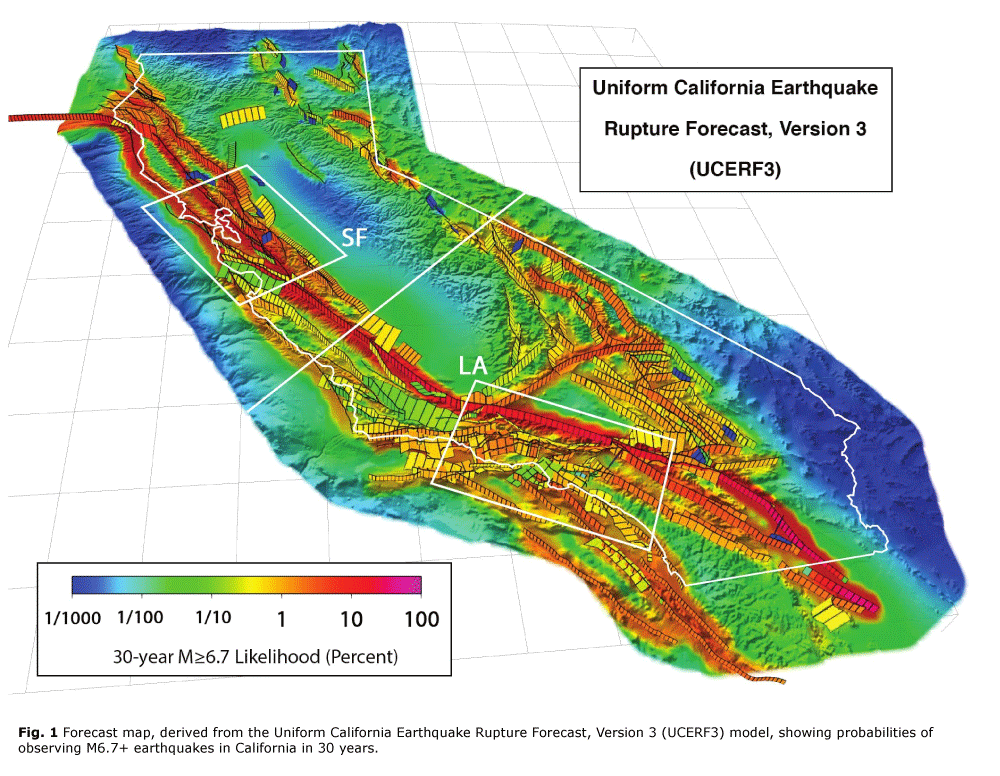

Moving from simplicity towards more physics-based accuracy, one of the most advanced forecasting models today is the Uniform California Earthquake Rupture Forecast, Version 3 (UCERF3, [1]). UCERF3 combines the simple yet effective nature of ETAS with fault-specific rupture forecasts based on the elastic rebound theory by Reid [5]. A major difference between the two approaches is that, in the Reid formulation, the location of a recent rupture is unlikely to rupture again soon after a mainshock, because the accumulated stress has just been released, while in ETAS the location of the mainshock is where aftershocks are most likely to occur. Combining the two approaches is possible in UCERF3 thanks to the availability of high-quality seismic, geological, and geodetic datasets, as well as many years of research on the seismotectonics of the area.

Citation

Mizrahi, Leila, Savran, William H., & Bayona, José A. (2022). Good practice report: new developments in physics- and statistics-based earthquake forecasting. Zenodo. https://doi.org/10.5281/zenodo.8074963

Reviewer

Stefan Wiemer

Expectations for the future

New developments in physics- and statistics-based earthquake forecasting are being made in various directions. The availability of geophysical datasets, including high-resolution earthquake catalogues, fault information, and interseismic strain data, has increased in recent years due to a combination of better instruments, novel data processing techniques, and more powerful computational capabilities. Such datasets enable the development of a variety of physics- and statistics-based earthquake forecasting models. Relevant developments in long-term forecasting have been made by Bird and Kreemer [6] with a model based on the Global Strain Rate Map, Version 2.1 (GSRM2.1, [7]). Bayliss et al. [8] described a framework for identifying the model components that are most beneficial for describing time-independent spatial patterns of seismicity.

In addition to developments in time-independent forecasting, several approaches to modelling time-dependent seismicity have been proposed recently that draw on knowledge of earthquake physics beyond the simple ETAS formulation. Instead of representing each earthquake as a point source, one can use information about the actual geometry of a fault and the movement of rocks that occurred during the earthquake to describe its nucleation process. This information, together with an understanding of how stress transfer in the Earth’s crust makes aftershocks in certain areas more or less likely than before a large earthquake, is used, for example, in the elastic Coulomb rate‐and‐state aftershock forecasts by Mancini et al. [9]. Although such a model is highly innovative and promising, it has not yet been able to outperform a standard ETAS model. Similarly, a suite of physics-based forecasting models has been evaluated against ETAS by Cattania et al. [10], with the relatively sobering outcome that their performance is at best comparable to that of ETAS.

While the big breakthrough is still outstanding, our increasing computational capacities and the continuing creativity of researchers worldwide are painting a bright future [11]. New models can be tested more and more efficiently ([12] tested several versions of ETAS based on millions of simulated earthquake catalogues), and the same models can be applied to larger and thus more representative datasets [13]. The discrepancy between forecast and reality can be related to variables that are not yet used in existing models [14]. Different existing models can be combined into new models by means of ensemble forecasting [15], or entirely new models can be built by borrowing tools from other fields such as machine learning (e.g. neural Hawkes processes, [16]). And although most models proposed thus far provide rather incremental improvements, if any, over the long-standing state-of-the-art that is ETAS, these findings provide highly valuable guidance as to which paths are most promising in the search for next-generation earthquake forecasting models.

The role of RISE

The development and testing of earthquake forecasting models are integral parts of the RISE agenda. WP2 contributes by enabling innovation in observational seismology, which will produce useful datasets for earthquake forecasters, and by seeking earthquake precursors in big-data applications. Even more directly related to the topic are the goals and deliverables of WP3. In summary, the goal of WP3 is to advance OEF, with more detailed goals that tap into the aspects mentioned earlier: knowledge transfer from other disciplines, the development of next-generation physics-based, stochastic, and hybrid forecasting models, the development of workflows to integrate OEF knowledge into dynamic risk assessment, and a general improvement in the understanding of the processes involved in earthquake occurrence.

An important aspect of the development of earthquake forecasting models is one that may seem secondary at first, but in fact plays a crucial role: RISE work package 7 (WP7) addresses the testing, comparison, and validation of forecasting models in a fully prospective mode (i.e., against future earthquakes). Only through an established consensus on how forecasts should be tested and compared to each other does it become possible to systematically advance earthquake predictability. The core goal of WP7 is to transform forecast testing and evaluation through the Collaboratory for the Study of Earthquake Predictability (CSEP [17]). With the release of the open-source toolkit pyCSEP [18], uniform testing metrics and tools have been made accessible to everyone. As another RISE activity, two fully prospective forecasting experiments, one for Italy and one for the globe, are starting shortly, in which RISE members’ forecasting models can be evaluated and compared transparently. This not only motivates participants to develop successful models, but also sets a standard for selecting the most appropriate models to be used for OEF in Italy, and benchmark models for the entire world.
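To make the idea of comparing forecasts concrete, the sketch below scores two gridded rate forecasts against observed earthquake counts using a joint Poisson log-likelihood and reports the difference per observed event. It is a simplified illustration in plain Python and does not use or reproduce the pyCSEP API; the function names, the four-cell grid, and all numbers are hypothetical, and independent Poisson-distributed counts per cell are assumed.

```python
import math


def poisson_log_likelihood(forecast_rates, observed_counts):
    """Joint log-likelihood of observed counts per cell, assuming independent
    Poisson distributions with the forecast rates (all rates must be > 0)."""
    total = 0.0
    for rate, n in zip(forecast_rates, observed_counts):
        total += -rate + n * math.log(rate) - math.lgamma(n + 1)
    return total


def information_gain_per_event(rates_a, rates_b, observed_counts):
    """Log-likelihood difference of forecast A over forecast B, divided by the
    number of observed events. Positive values favour forecast A."""
    n_events = sum(observed_counts)
    if n_events == 0:
        raise ValueError("at least one observed event is required")
    ll_a = poisson_log_likelihood(rates_a, observed_counts)
    ll_b = poisson_log_likelihood(rates_b, observed_counts)
    return (ll_a - ll_b) / n_events


# Hypothetical forecasts with the same total expected number of events (2.0).
uniform_forecast = [0.5, 0.5, 0.5, 0.5]
clustered_forecast = [0.1, 0.1, 1.7, 0.1]
observed = [0, 0, 2, 0]  # both events fall in the third cell

gain = information_gain_per_event(clustered_forecast, uniform_forecast, observed)
print(f"Information gain per event: {gain:.3f}")
```

Because both hypothetical forecasts expect the same total number of events, the gain here reflects only how well each one places the events in space. In a prospective experiment, the observed counts would come from earthquakes that occurred after the forecasts were submitted.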

Because the study of “New Developments in Physics- and Statistics-based Earthquake Forecasting” plays such a pivotal role in RISE, a technical session on this topic, organised by RISE members, is being held at the 2022 annual meeting of the Seismological Society of America (SSA2022). This session will be dedicated to discussing new observables, hypotheses and methods that could improve our understanding of earthquakes.